Wouldn’t it be great if you could bring Snowflake into the Oracle database ecosystem? Or, you may need to add Oracle database to the Snowflake ecosystem. What about pushing down the Oracle database ETL to the Snowflake layer? Or maybe, you have Oracle legacy system and you want to query Oracle data from Snowflake with all changes in data visible in real time. And finally, would you like to incorporate Snowflake data into Oracle PL/SQL ETL code?

Let’s take a look at 3 ways how to connect Oracle to Snowflake.

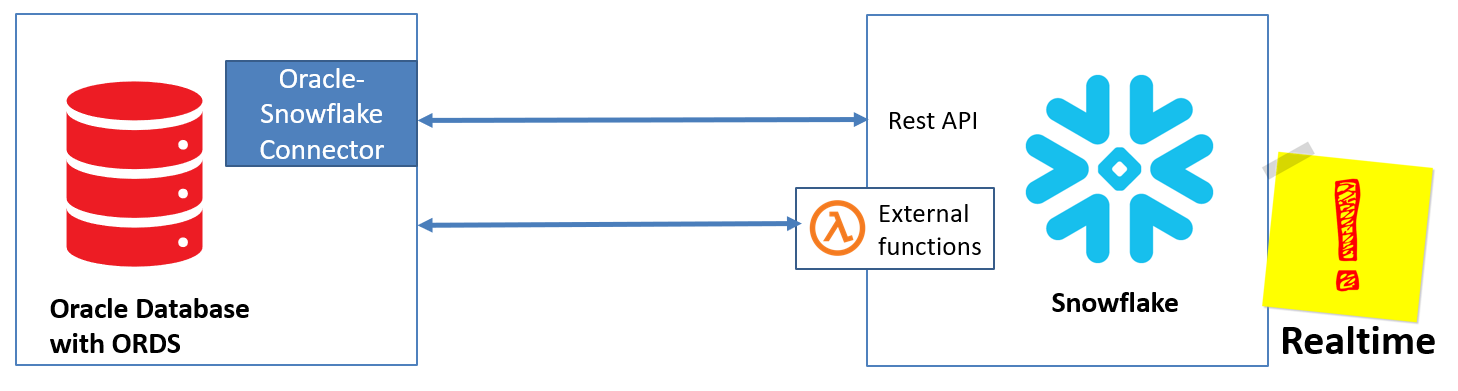

1st approach – Two-way communication between Snowflake and Oracle

Our Oracle-Snowflake Connector – provides a connection from Oracle to Snowflake

It’s a generic API tool developed by Database Consulting team enabling to connect from Oracle Database to the Snowflake Data Cloud. It allows querying, modifying the data as well as creating and changing objects on the Snowflake side without the need for an additional layer between Oracle database and Snowflake. It gives a clear window to incorporate data processing using state-of-the-art Snowflake solution as a part of the existing Data Warehousing processing or an enhancement to it. Oracle to Snowflake connector makes it possible to create POC comparing performance between running the logic on Snowflake and running it on Oracle without involving any additional tool. With a minimal effort, the existing code can be transformed into a version that enables running it on Snowflake instead of Oracle.

This connector is a PL/SQL package. It can be used to perform several database operations on Snowflake and Oracle datasets.

- Execute SQL statement on the Oracle side to retrieve Snowflake data

- Execute DML (update, delete, insert) on the Oracle side against Snowflake data

- Run Snowflake JavaScript stored procedure from Oracle Database

- Execute DDL (create, drop, alter) on the Oracle side against Snowflake objects.

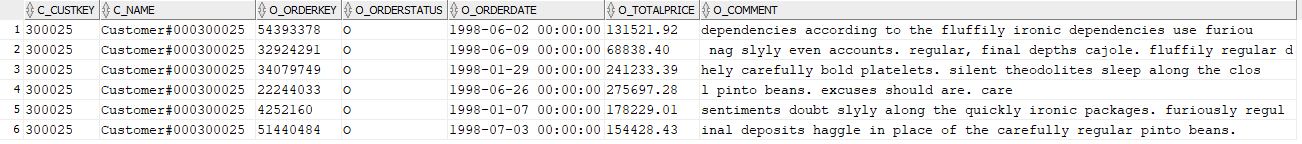

Example. SQL query executed on the Oracle side querying data from Snowflake

SELECT

json_value(column_value,'$[0]') AS c_custkey

,json_value(column_value,'$[1]') AS c_name

,json_value(column_value,'$[2]') AS o_orderkey

,json_value(column_value,'$[3]') AS o_orderstatus

,to_char(date'1970-01-01'+json_value(column_value,'$[4]')) AS o_orderdate

,json_value(column_value,'$[5]') AS o_totalprice

,json_value(column_value,'$[6]') AS o_comment

FROM table(datacons_sfconnector.query(q'[

SELECT

c_custkey,

c_name,

o_orderkey,

o_orderstatus,

o_orderdate,

o_totalprice,

o_comment

FROM snowflake_sample_data.tpch_sf10.orders o

JOIN snowflake_sample_data.tpch_sf10.customer c ON c_custkey = o_custkey

WHERE o_orderdate BETWEEN to_date(?,'YYYY-MM-DD')

AND to_date(?,'YYYY-MM-DD')

AND c_custkey = ?

]',json_key_list('1998-01-01','1998-12-31','300025')));

Read more at: https://datacons.co.uk/oracle-snowflake-connector/

In an upcoming blog post I will be happy to describe this way in further detail.

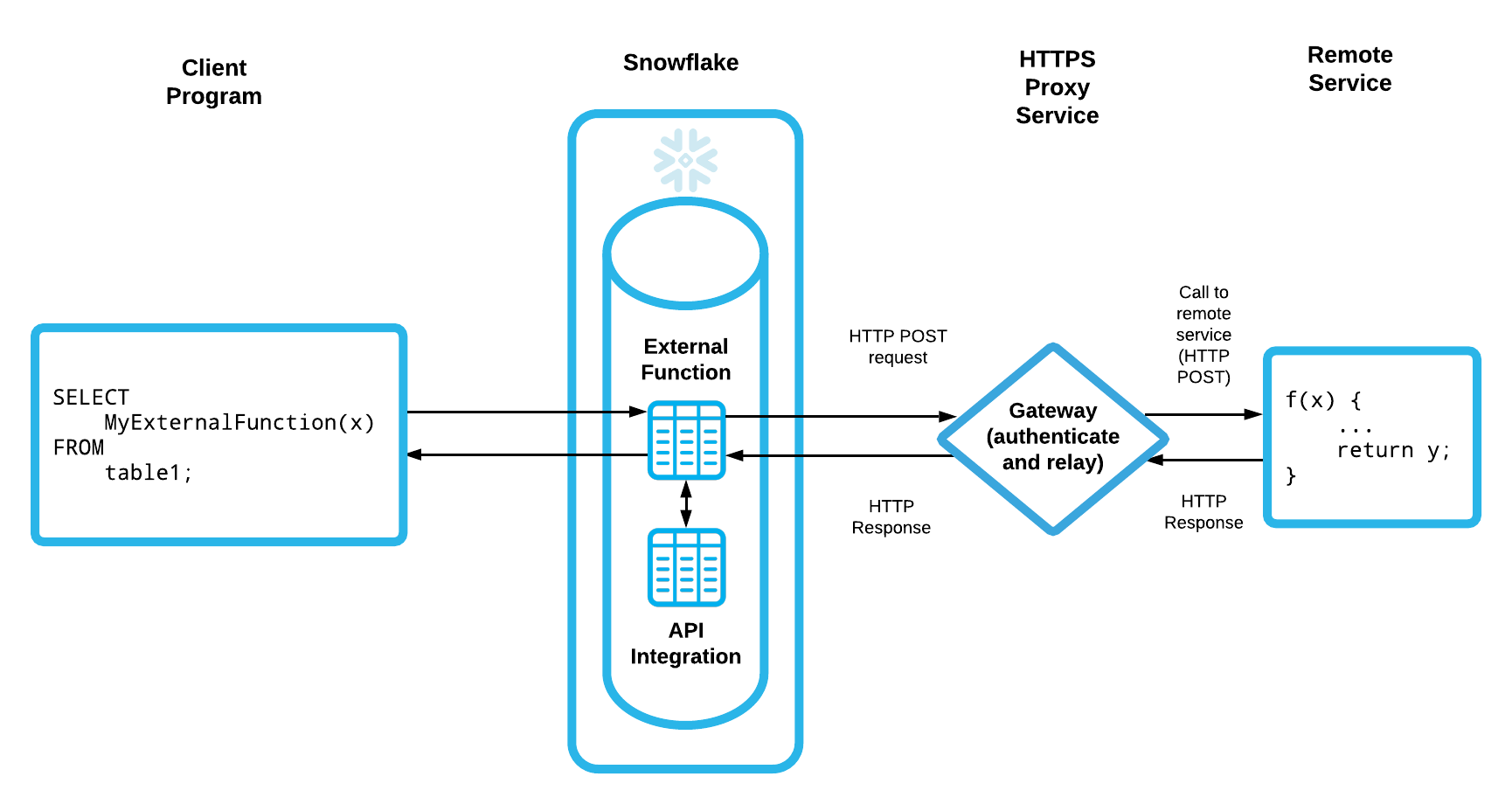

Lambda – External function – provides connection from Snowflake to Oracle

What about query Oracle tables and views directly from Snowflake? Let’s do it.

What do we need?

- Snowflake

- Oracle with ORDS

- Snowflake External function – calls code that is executed outside Snowflake

Source: https://docs.snowflake.com/en/sql-reference/external-functions-introduction.html

CREATE OR REPLACE API INTEGRATION my_api_integration_01

api_provider = aws_api_gateway

api_aws_role_arn = '……'

enabled = true

api_allowed_prefixes = ('https://…….amazonaws.com/………')

CREATE OR REPLACE EXTERNAL FUNCTION execute_immediate(sql VARCHAR)

RETURNS VARIANT

API_INTEGRATION = my_api_integration_01

AS 'https://….amazonaws.com/….';

- AWS Lambda – a serverless compute service that lets you run code without provisioning or managing servers

See our Lamba code at the end of this current blog post.

Let’s check how it works – execute in Snowflake:

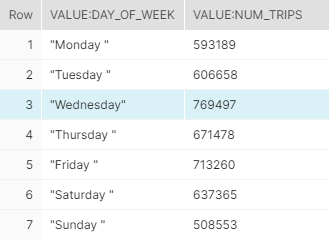

WITH cte AS(SELECT parse_json(execute_immediate('SELECT * FROM day_of_week_trips')):items[0]:resultSet:items AS v)

SELECT value:day_of_week

,value:num_trips

FROM cte,LATERAL FLATTEN(INPUT => v);

The result:

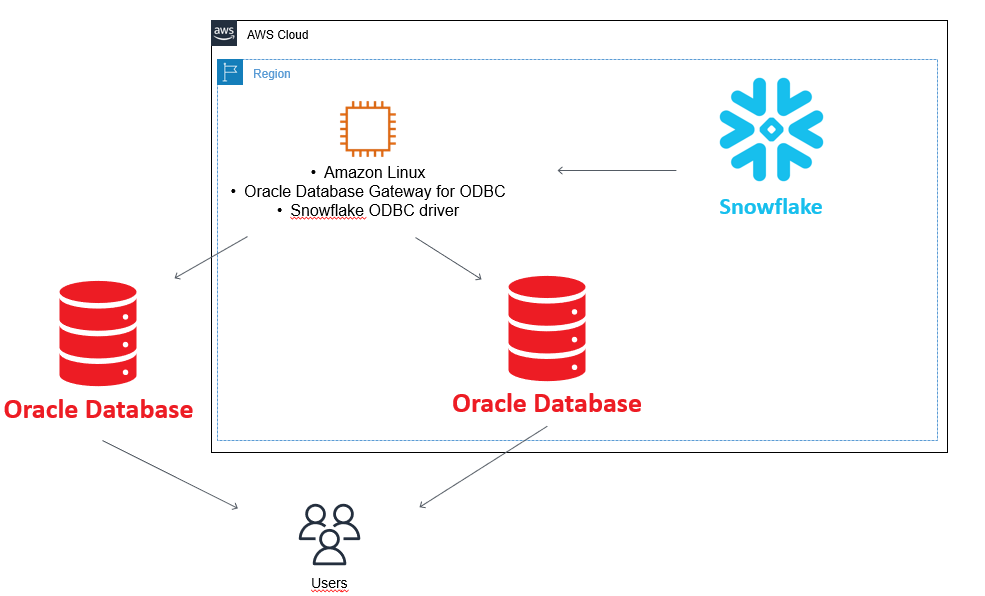

2nd approach – ODBC as a one-way communication from Oracle to Snowflake

Snowflake has an ODBC driver. Oracle supports heterogeneous services to allow data in a non-Oracle database to be queried using SQL. Please find the great and informative blog post from David Ruthven describing how to use ODBC: https://david-ruthven.medium.com/connect-oracle-to-the-snowflake-data-cloud-a984463cc970

Please take a look at the sample scenario. In our case we use a separate Gateway instance – in that way we are able to connect multiple Oracle databases to multiple Snowflake instances.

In our case Snowflake, Linux instance with ODBC and Gateway configured are based in the same AWS region.

Advantages:

- “Database Gateway for ODBC is included in all offerings” source

- You can query Snowflake data from Oracle using database links

- Pushing predicates into Snowflake side – partition pruning can be used

Disadvantages:

- Requires additional ODBC and Gateway configuration

- There are some performance challenges – some operations will be processed at the Oracle end

- The functionality is limited

In an forthcoming blog post I will dive into this.

3rd approach – Gluent’s transparent data connector for Snowflake

With Gluent Data Platform 4.1, customers can migrate Oracle databases to Snowflake easily without having to write ETL flows or rewrite any existing applications. Read more: https://gluent.com/gluent-4-1-release-adds-snowflake-and-cloudera-public-cloud-support/

Stay tuned folks for more pearls of wisdom 🙂

Appendix:

Lamba – Node.js code:

var querystring = require('querystring');

var https = require('https');

var ORDSHost = 'XXX.XXX.XXX.XXX'; //IP of Oracle instance with ORDS configured

var username = 'SAMPLESCHEMA'; //schema name

var password = '*************'; //password for schema

function ORDSSQLRequest(username, password, host, sql) {

return new Promise((resolve, reject) => {

var dataString = sql;

var headers = {};

var endpoint = 'https://' + host + '/ords/dev/_/sql';

headers = {

'Content-Type': 'application/sql',

'Authorization': 'Basic ' + Buffer.from(username + ':' + password).toString('base64'),

'Content-Length': dataString.length

};

var options = {

host: host,

path: endpoint,

method: 'POST',

headers: headers

};

process.env["NODE_TLS_REJECT_UNAUTHORIZED"] = 0;

var req = https.request(options, function(res) {

res.setEncoding('utf-8');

var responseString = '';

res.on('data', function(data) {

responseString += data;

});

res.on('end', function() {

resolve(responseString);

});

});

req.write(dataString);

req.end();

});

}

exports.handler = async (event) => {

var result = [];

for (var i = 0; i < event.data.length; i++) {

result[i] = ORDSSQLRequest(username, password, ORDSHost, event.data[i][1]);

}

await Promise.all(result);

for (var i = 0; i < result.length; i++) {

result[i] = [i, await result[i]];

}

const response = {

statusCode: 200,

data: result

};

return response;

};